In a recent incident, a 49-year-old woman was struck and fatally injured by an Uber self-driving vehicle while crossing a road in Tempe, AZ. It was the first pedestrian fatality involving such a vehicle, and it has prompted a good deal of discussion about the safety of self-driving cars.

(I updated this article with some corrections and additional information on 3/24/2018. Further updated, in light of the second Tesla Autopilot-related death on 3/23/2018.)

How Safe Are US Roads?

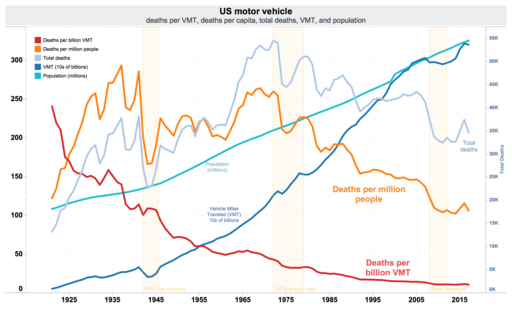

Before we look at self-driving cars, let’s consider how safe US roads are in general, right now.

According to the National Highway Traffic Safety Administration (NHTSA), 37,461 lives were lost on US roads in 2016, the most recent year for which figures were available at the time of writing (report). That is a very high number, averaging more than 100 deaths per day. However, because Americans drive such vast distances, the NHTSA calculates that it equates to 1.18 fatalities per 100 million vehicle miles traveled (VMT). We’ll return to this number when considering the safety of self-driving vehicles.
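As a rough consistency check, derived only from the two NHTSA figures above rather than from a separately reported mileage total, the per-day average and the implied distance driven in 2016 (a leap year, hence 366 days) work out as follows:

$$
\frac{37{,}461\ \text{deaths}}{366\ \text{days}} \approx 102\ \text{deaths per day},
\qquad
\text{VMT}_{2016} \approx \frac{37{,}461}{1.18} \times 10^{8} \approx 3.17 \times 10^{12}\ \text{miles}
$$

In other words, the rate of 1.18 looks small only because the US drives more than three trillion miles a year.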

But that’s not the whole story. The fatality rate per VMT has been trending downwards for some time.

Even so, the NHTSA’s 2016 figures make it clear that many deaths are avoidable:

- Deaths resulting from drunk driving totaled 10,497 (28% of the total).

- Vehicle occupants not wearing seat belts accounted for 10,428 deaths (28%).

- Speeding was a factor in 10,111 deaths (27%).

- Distracted driving accounted for 3,450 deaths (9%).

- Drowsiness was a factor in 802 deaths (2%).

What is the Potential for Self-Driving Car Safety?

Looking at the contributing factors in 2016’s fatal accidents (I believe there is some overlap between these categories), it’s clear that a self-driving vehicle isn’t going to get drunk, drowsy or distracted, and it should obey posted speed limits. (The Uber vehicle that struck the pedestrian was apparently traveling at 38 mph in a 35 mph zone.) Nor is it going to be affected by road rage.

However, this assumes that self-driving cars can control a motor vehicle at least as well as a human. In theory, a car governed by a lightning-fast computer should substantially reduce its thinking distance (the distance traveled between a hazard appearing and the brakes being applied), and so drive better than a human.
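To put rough numbers on thinking distance: it is simply speed multiplied by reaction time. The Python sketch below compares two reaction times at the 38 mph the Uber vehicle was reportedly traveling. Both reaction times are hypothetical values chosen purely for illustration, not measured figures for any particular driver or system.

```python
MPH_TO_FT_PER_S = 5280 / 3600  # 1 mph is about 1.467 feet per second


def thinking_distance_ft(speed_mph: float, reaction_time_s: float) -> float:
    """Distance covered at constant speed before the brakes are even applied."""
    return speed_mph * MPH_TO_FT_PER_S * reaction_time_s


if __name__ == "__main__":
    # Hypothetical reaction times, for illustration only.
    for label, reaction_s in [("human driver", 1.5), ("fast computer", 0.2)]:
        distance = thinking_distance_ft(38, reaction_s)
        print(f"{label:>13}: {distance:5.1f} ft at 38 mph")
```

At 38 mph a car covers about 56 feet every second, so each tenth of a second shaved off the reaction time saves more than 5 feet of travel before braking even begins.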

The truth, though, is that the artificial intelligence (AI) in today’s self-driving cars is still not very sophisticated. It’s smart, sure, and it will get a lot smarter as the technology matures, but it is currently nowhere near as smart as an average human. In fact, it’s still pretty dumb. HAL 9000, Skynet and Proteus remain far off in the future.

But Haven’t Self-Driving Cars Completed Millions of Accident-Free Miles?

That’s an interesting question. Waymo, the company developing Google’s self-driving technology, frequently claims millions of miles driven and a very low accident rate. It also points out that most of the accidents its cars have been involved in were not their fault.

But that’s not the whole story:

- Self-driving cars tend to drive more slowly than other vehicles. This was particularly true in the early days of testing on public roads, when autonomous vehicles were even pulled over by police for impeding the flow of traffic. It’s far easier to avoid accidents at lower speeds, since there is more time to take evasive action, and any accidents that do still occur are likely to be less severe.

- A University of Michigan study found that self-driving cars were roughly five times more likely to be involved in an accident than cars driven by humans. Their relatively slow speeds appear to frustrate following drivers, who are then more likely to collide while overtaking. Self-driving cars also make what humans might regard as unpredictable, or even dumb, decisions that lead to accidents. (As a simple example, a self-driving car is likely to perform a full emergency stop if a balloon, or a tumbleweed, is blown into its immediate path.)

- Self-driving cars have mostly been tested in environments that present a minimum of surprises. The smooth, clear roads and sunny weather of California and Arizona are a far cry from the dirt roads, potholes, snow, ice and fog of, for example, Michigan, the state I call home. It’s hardly surprising, therefore, that they encounter fewer incidents than drivers across the US as a whole.

- The biggest concern, however, is that these miles are not fully autonomous in the strictest sense: there is typically a human operator monitoring the vehicle, ready to take control at a moment’s notice. When the operator takes the vehicle out of autonomous mode, this is termed a disengagement. In California, self-driving car companies are required to report to the state’s DMV all disengagements that meet the following definition: “a deactivation of the autonomous mode when a failure of the autonomous technology is detected or when the safe operation of the vehicle requires that the autonomous vehicle test driver disengage the autonomous mode and take immediate manual control of the vehicle.” (Read Waymo’s Autonomous Vehicle Disengagement Report for 2017.)

What is significant is not the accident-free miles, but the accidents that were recorded and those that disengagements likely prevented.

So How Far Can An Autonomous Vehicle Travel Between Disengagements?

Once again, we have the California DMV to thank for providing the numbers (for the period Dec. 1, 2016 to Nov. 30, 2017):

| Company | Autonomous VMT (miles) | Disengagements | VMT per Disengagement |
|---|---|---|---|
| Waymo | 352,545 | 63 | 5,596.0 |
| GM Cruise | 131,676 | 105 | 1,254.1 |
| Nissan | 5,007 | 24 | 208.6 |
| Zoox | 2,244 | 14 | 160.3 |
| Drive.ai | 6,015 | 92 | 65.4 |
| Baidu | 1,949 | 43 | 45.3 |
| Telenav | 1,581 | 52 | 30.4 |
| Aptiv | 1,811 | 81 | 22.4 |
| Nvidia | 505 | 109 | 4.6 |
| Valeo | 552 | 212 | 2.6 |
| Bosch | 2,041 | 1,196 | 1.7 |
| Mercedes-Benz | 1,088 | 843 | 1.3 |
| Overall | 507,014 | 2,834 | 178.9 |

Yes. You read those numbers right.

Clearly, a lot depends on which company’s self-driving technology you’re talking about. Waymo has the best numbers, but even it can’t yet average 6,000 miles between safety-related disengagements. At the other end of the scale, the technologically competent Mercedes-Benz can’t even manage an average of 1½ miles! That said, these numbers are clearly improving over time, but every company still has some way to go.

An industry average of just 178.9 miles between safety interventions doesn’t fill me with confidence that the technology is ready for prime time, or even close to it.
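For reference, each per-company figure in the table above is simply autonomous miles divided by disengagements, and the 178.9-mile “industry average” is a pooled figure: total miles over total disengagements. A minimal Python sketch reproducing them from the DMV numbers (the helper names are hypothetical):

```python
# (company, autonomous miles, disengagements), California DMV, Dec. 1, 2016 to Nov. 30, 2017
REPORTS = [
    ("Waymo", 352_545, 63),
    ("GM Cruise", 131_676, 105),
    ("Nissan", 5_007, 24),
    ("Zoox", 2_244, 14),
    ("Drive.ai", 6_015, 92),
    ("Baidu", 1_949, 43),
    ("Telenav", 1_581, 52),
    ("Aptiv", 1_811, 81),
    ("Nvidia", 505, 109),
    ("Valeo", 552, 212),
    ("Bosch", 2_041, 1_196),
    ("Mercedes-Benz", 1_088, 843),
]


def miles_per_disengagement(miles: int, disengagements: int) -> float:
    """Average autonomous miles driven between disengagements."""
    return miles / disengagements


def pooled_rate(reports) -> float:
    """Total miles over total disengagements, so every mile counts equally."""
    total_miles = sum(m for _, m, _ in reports)
    total_disengagements = sum(d for _, _, d in reports)
    return total_miles / total_disengagements


if __name__ == "__main__":
    for name, miles, dis in REPORTS:
        print(f"{name:<14}{miles_per_disengagement(miles, dis):>9.1f}")
    print(f"{'Overall':<14}{pooled_rate(REPORTS):>9.1f}")
```

Because the overall figure is pooled, Waymo’s 352,545 miles (about 70% of the total) dominate it; nine of the twelve companies individually fall below 178.9 miles.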

Ironically, it should not be forgotten that it is we humans who are keeping the accident rate of autonomous vehicles down: self-driving cars can’t yet be trusted to operate without us. (Even so, a possible contributing factor in the Uber death is that the human operator didn’t appear to be watching the road in the moments leading up to the accident.) It should also be noted that, unlike California, Arizona does not require self-driving car companies to report safety-related disengagements, which makes it harder to assess Uber’s performance relative to other companies.

To my knowledge, there have been three fatalities resulting from self-driving cars to date. The other two were incidents with Tesla vehicles operating in the inappropriately named Autopilot mode, in May 2016 and on March 23, 2018 (coincidentally, the same day that this article was originally published). I would estimate that there have been fewer than 10 million autonomous VMT in total in the US. Assuming that mileage, the fatality rate would be roughly 30 deaths per 100 million VMT, at (generally) slow speeds, on good roads, in good conditions and with a human monitoring safety. Human drivers still come out well ahead, with a much lower (but still far too high) rate of 1.18 per 100 million VMT (see above).
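The arithmetic behind that estimate is simple, but it rests entirely on the assumed mileage, which is a guess rather than a reported figure (and, with only three deaths, any rate is statistically very rough). A small Python sketch, with a hypothetical helper name, makes the sensitivity explicit:

```python
HUMAN_RATE_2016 = 1.18  # NHTSA, deaths per 100 million VMT


def deaths_per_100m_vmt(deaths: int, vmt: int) -> float:
    """Fatality rate normalized to 100 million vehicle miles traveled."""
    return deaths * 100_000_000 / vmt


if __name__ == "__main__":
    # 10 million miles is the author's rough estimate; try a range around it.
    for assumed_vmt in (5_000_000, 10_000_000, 20_000_000):
        rate = deaths_per_100m_vmt(3, assumed_vmt)
        print(f"{assumed_vmt:>12,} miles -> {rate:5.1f} per 100M VMT "
              f"({rate / HUMAN_RATE_2016:.0f}x the human rate)")
```

Even doubling the assumed mileage to 20 million still gives 15 deaths per 100 million VMT, roughly 13 times the human figure.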

What Other Problems are There?

I once spent two years working for the robotic warehouse start-up Kiva Systems, which has since been acquired by Amazon and is now known as Amazon Robotics. Kiva employed some very smart people (out of a workforce of around 120 at the time I was there, 17 had Ph.D.s). And yet, whenever there was a choice between a simple, straightforward but sub-optimal solution and a highly complex, provably optimal alternative, the latter always won out. Why? Because (a) it was more challenging, and the intellectual rewards for even figuring out an optimal solution were far greater, and (b) it was assumed that the system’s performance would improve as a result.

But did it help us? Not in my opinion. Often, a solution was optimal only under unrealistic, purely theoretical conditions. For example, a particular solution might be provably optimal in a warehouse with a single robot, yet nowhere near optimal with 100 robots competing for resources. The upshot was a system that exhibited emergent behavior far beyond human intuition. If we changed the configuration, or the operating rules, the results were wholly unpredictable. Even without such changes, a small shift in, say, the pattern of orders placed on the warehouse might result in radically different system performance. In effect, we made our jobs harder, and wasted time introducing and fixing some very sophisticated bugs, in a system that was crying out for simplification.

The tendency of smart people—and particularly those with a background in academic research—to adopt complex solutions over simple ones is very evident in the self-driving car industry, too.

Let’s take just one very specific technical problem out of the many thousands that these systems have to solve: recognizing traffic signals. Nearly all of these companies are busily developing solutions based on cameras and image-processing logic, and there seems to be a new patent filed for one such approach or another every minute. On the face of it, the problem is a simple one: detect traffic signals and obey them. However, whatever solution is adopted, it must work reliably 100% of the time, or very, very close to it. If not, cars are going to sit at green lights, driving other road users crazy, or (far more dangerously) run red lights. Indeed, an Uber vehicle ran a red light in San Francisco in 2016, which led to Uber’s vehicles being taken off the road there for a time. A single mistake can easily result in deaths and serious injuries.

So, say you have designed a system that can recognize traffic lights and inform the rest of the system which routes are available. Let’s consider some of the edge cases:

- How does it react if the sun is directly behind the traffic light? Can it still read the signal reliably?

- Can it handle a failed bulb? (That is, can it recognize the outline of a traffic signal and determine that none of the lights are illuminated? Can it identify which bulb has failed and react accordingly?)

- Can it handle power outages? (This is similar to the bulb failure scenario, but has different semantics, because—in the US—such intersections become 4-way stops. Does it recognize the intersection and react accordingly?)

- How does it handle a situation in which it is following a tall vehicle that obscures the traffic signals?

- Can it differentiate an inoperative traffic signal (one that is temporarily not in use due to road work, etc.) from one with a failed bulb?

- Can it find and read an associated “No Turn on Red” sign? Can it further read and understand the times and days at which it can or cannot turn on red? Similarly, can it recognize “No Left Turn” signs?

- Can it reliably tell when it is in a state and/or country that forbids turning on red?

- Can it distinguish the “Left Turn Lane” and “Right Turn Lane” signals from the other signals?

- Can it differentiate a true traffic signal from a fake one placed at the intersection by someone trying to hack the intersection?

- Etc.

I hope I’ve conveyed a realization that this problem is a lot more complex than it first appeared…
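Even a toy version of the decision logic shows the problem. The rules themselves fit in a few lines, but every input (is the signal dark or merely obscured? is there a “No Turn on Red” sign? which jurisdiction are we in?) is itself one of the hard perception problems listed above. A minimal Python sketch, in which every type and function name is hypothetical and US right-turn-on-red rules are heavily simplified:

```python
from enum import Enum, auto


class Observed(Enum):
    """What a (hypothetical) perception layer reports for the relevant signal head."""
    GREEN = auto()
    YELLOW = auto()
    RED = auto()
    DARK = auto()      # signal head found, but no lamp lit
    OCCLUDED = auto()  # e.g. hidden behind a tall vehicle


class Action(Enum):
    PROCEED = auto()
    PREPARE_TO_STOP = auto()
    STOP = auto()
    STOP_THEN_TURN_RIGHT = auto()
    ALL_WAY_STOP = auto()


def decide(observed: Observed, *, turning_right: bool = False,
           no_turn_on_red_sign: bool = False,
           jurisdiction_allows_right_on_red: bool = True) -> Action:
    if observed is Observed.GREEN:
        return Action.PROCEED
    if observed is Observed.YELLOW:
        return Action.PREPARE_TO_STOP
    if observed is Observed.RED:
        # Ignores time-of-day restrictions on "No Turn on Red" signs entirely.
        if turning_right and jurisdiction_allows_right_on_red and not no_turn_on_red_sign:
            return Action.STOP_THEN_TURN_RIGHT
        return Action.STOP
    if observed is Observed.DARK:
        # Assumes a power outage (an all-way stop in the US), yet a failed bulb
        # or an inoperative signal can look exactly the same to a camera.
        return Action.ALL_WAY_STOP
    # OCCLUDED: a conservative fallback chosen for this sketch, not a legal rule.
    return Action.STOP
```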

But, in any case, this is madness!

There is a far simpler solution: have each intersection broadcast an authenticated electronic signal reporting its current state. Any autonomous vehicle could read, authenticate, process and act upon that signal simply and far more reliably. (Clearly, the intersection would need a reliable power source to keep broadcasting during the all-too-frequent power outages that the US suffers from.)
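To give a feel for how simple the receiving side could be, here is a minimal Python sketch of an authenticated intersection broadcast. It is an illustration only: the message layout and function names are hypothetical, and it uses a shared-key HMAC purely for brevity. A shared key is a real weakness (any vehicle holding it could forge messages), which is why deployed vehicle-to-infrastructure designs, such as Signal Phase and Timing (SPaT) messages secured under IEEE 1609.2, rely on certificate-based digital signatures instead.

```python
import hashlib
import hmac
import json
import time
from typing import Optional


def make_broadcast(intersection_id: str, phases: dict, key: bytes,
                   ts: Optional[float] = None) -> dict:
    """Serialize the intersection's state and attach an HMAC-SHA256 tag."""
    payload = {"id": intersection_id, "phases": phases,
               "ts": time.time() if ts is None else ts}
    body = json.dumps(payload, sort_keys=True)
    tag = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    return {"body": body, "tag": tag}


def verify_broadcast(msg: dict, key: bytes, max_age_s: float = 1.0,
                     now: Optional[float] = None) -> Optional[dict]:
    """Return the decoded state if the tag is valid and the message is fresh, else None."""
    expected = hmac.new(key, msg["body"].encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, msg["tag"]):
        return None  # forged or tampered with
    payload = json.loads(msg["body"])
    now = time.time() if now is None else now
    if abs(now - payload["ts"]) > max_age_s:
        return None  # stale or replayed
    return payload


if __name__ == "__main__":
    key = b"demo-key-do-not-use"  # hypothetical shared key
    msg = make_broadcast("INT-0042", {"northbound": "green", "eastbound": "red"}, key)
    print(verify_broadcast(msg, key))  # fresh and untampered, so the state is returned
```

The timestamp check matters as much as the tag: without it, an attacker could record a genuine “green” message and replay it later.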

The same is true of many of the other obstacles that autonomous vehicles have to overcome, such as recognizing emergency vehicles, reading street signs, anticipating other vehicles’ actions, etc.

All that is needed is some standards.

Why Don’t Self-Driving Car Companies Cooperate Rather Than Compete?

Firstly, they all want to build valuable patent portfolios so that they can secure royalties from their competitors, or protection against IP suits. Secondly, they don’t like the idea of helping new entrants into the market, which would reduce the return on their research investments. Thirdly, they would necessarily lose control and influence over the industry.

But this, again, is complete madness!

A far better approach (and one that the simple traffic light solution outlined above would require) would be to establish a centralized, national (or international) standards organization to take the lead. Such an organization would immediately render complex patent portfolios unnecessary and largely valueless.

All parties—car makers, autonomous system developers, police and emergency services, city, county, state & federal/national governments, safety organizations, cyber security consultants, etc.—could participate in a central forum to define simple, safe standards that bring autonomous vehicles much closer to being a reality than any could individually.

What Other Benefits Might Come From Cooperation?

The scope for improvement is simply immense, making vehicle control proactive instead of reactive in many cases. Consider some of these possibilities:

- Autonomous vehicles could communicate with each other, synchronizing their activities, such as when changing lanes, turning onto side-roads, joining roundabouts, etc.

- Vehicles could communicate with intersection control systems, notifying them of their intended routes. Each intersection, or system of intersections, could then adjust signal timings dynamically to improve traffic flow and fuel efficiency. Such a system could even recommend faster, or more economical, alternative routes to approaching vehicles.

- Emergency vehicles could notify vehicles ahead of them of their intended routes, giving those vehicles plenty of time to make way, shortening journey times and saving lives.
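The emergency-vehicle case shows how little logic a receiving car would need once a shared message format exists. A minimal Python sketch, assuming a hypothetical notice that lists the road-segment IDs on the emergency vehicle’s planned route; it deliberately ignores direction of travel, lanes and authentication, all of which a real standard would have to cover:

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class EmergencyRouteNotice:
    """Hypothetical broadcast: an emergency vehicle's planned route as road-segment IDs."""
    vehicle_id: str
    route: Tuple[str, ...]  # ordered; route[0] is the segment the vehicle is on now


def segments_ahead(notice: EmergencyRouteNotice, my_segment: str) -> Optional[int]:
    """Segments the emergency vehicle must travel to reach us, or None if we're off its route."""
    try:
        return notice.route.index(my_segment)
    except ValueError:
        return None


def should_make_way(notice: EmergencyRouteNotice, my_segment: str,
                    lookahead: int = 3) -> bool:
    """Start pulling over once the vehicle is within `lookahead` segments (illustrative threshold)."""
    n = segments_ahead(notice, my_segment)
    return n is not None and n <= lookahead
```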

Sadly, I can’t see it happening. I simply don’t see the various autonomous vehicle companies collaborating on standards any time soon.

Should Fully Autonomous Vehicles Be Permitted In The Near Future?

Well, it is actually already happening. Back in November of last year, Waymo announced that it was already running fully autonomous vehicles in the Phoenix metro area. Its technology appears to be the most sophisticated and mature, but I am still skeptical. However, this is only happening in Arizona, which has very lax rules on the technology in order to encourage development within the state. (It’s not as well publicised, but Waymo’s cars can be remotely controlled, so perhaps they are remotely monitored as well? It wouldn’t surprise me. It’s important for these companies that the cars appear to be fully autonomous, to accelerate acceptance of the technology, even if they actually are not. Unless Arizona starts requiring companies to report safety-related disengagements, we shall never know for sure.)

It’s clear that many of the other players want this too. Some companies have made what are, in my humble opinion, some pretty irresponsible forecasts of when they will achieve fully autonomous driving, to the largely overawed and uncritical approval of the media. Tesla, for example, claimed back in October 2016 that its cars were equipped with all of the hardware necessary for full autonomy, and that they would achieve it in 2018. GM announced just a couple of months ago that it will be manufacturing vehicles without human driver controls, such as steering wheels and pedals, as soon as 2019. Meanwhile, in November 2017, Uber placed an order for 24,000 vehicles from Volvo that are to become a fully autonomous fleet.

(I should point out that Tesla is obsessed with replacing humans with automation in its factories, which is one of the reasons it has failed to achieve its production targets for the new Model 3, while Uber is similarly obsessed with eliminating its drivers and replacing them with AI systems. They’re not alone, but replacing all human labour with machines has social implications that we should be discussing too.)

The Tesla-related deaths are worthy of further discussion. Tesla’s Autopilot is technically not a self-driving car technology, since it requires drivers to keep their hands on the wheel and pay full attention; it is more of an advanced form of cruise control. However, Tesla’s marketing regularly promotes “Full Self-Driving Hardware on All Cars” (quote retrieved on 3/31/2018) and promises features that obscure the limited capabilities of its existing software. Tesla has described some releases of Autopilot as beta-quality software, meaning that it had not yet been adequately tested, yet still encouraged drivers to use it. Together with features such as “Insane” and “Ludicrous” modes (which accelerate a car from 0 to 60 mph in as little as 2.8 seconds, showing off the acceleration of an electric motor), this suggests to me that Tesla is more concerned with hyping its products and features than it is with safety.

In particular, Tesla is stretching its credibility when it claims very low accident rates resulting from its technology and promotes its alleged self-driving capabilities, yet still blames its customers when accidents happen.

Clearly, with many millions of dollars invested in self-driving car technology, companies are under a lot of commercial pressure (and, in some cases, political pressure, as in Arizona) to fulfill their goals and sell the resulting products and services. My concern is that these commercial ambitions must not come at the expense of safety. We urgently need improved, publicly accessible reporting of autonomous vehicle incidents (including safety-related disengagements), as well as far more rigorous safety standards, and continuous testing, of autonomous vehicles.

What’s your view?